So…

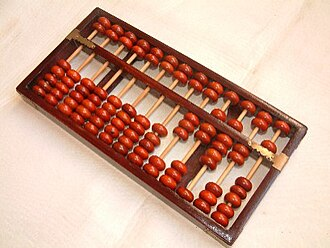

This is not analog computing by any means.

How about

?

?/joke

Isn’t there already a special low-power part of phone chips designed to listen for wake words?

— with a little help from AI.

I call nullshit.

nullshit.

Relating this to carbon emissions is absurd. Your phone’s maximum power consumption is about 25 W, of which sensors are a minuscule fraction. Running your phone at 25 W for an entire year uses roughly the same energy as driving a typical petrol car doing 40 mpg for 250 miles.

Reducing sensor power usage is good, but not for this reason.
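For anyone who wants to check the arithmetic, here is a rough sketch. The petrol energy content (about 9.5 kWh per litre) and the use of UK gallons are my assumptions, not figures from the article, so treat the result as a ballpark only.

```python
# Rough energy comparison; every constant below is an assumed round figure.
PHONE_POWER_W = 25             # worst-case phone draw used in the comment above
HOURS_PER_YEAR = 24 * 365
PETROL_KWH_PER_LITRE = 9.5     # assumed approximate energy content of petrol
LITRES_PER_UK_GALLON = 4.546   # assumes "mpg" means miles per UK gallon
MPG = 40
MILES = 250


def phone_energy_kwh(power_w=PHONE_POWER_W, hours=HOURS_PER_YEAR):
    """Energy used by a device drawing power_w watts continuously for hours."""
    return power_w * hours / 1000


def car_energy_kwh(miles=MILES, mpg=MPG):
    """Chemical energy in the fuel burned to cover the given distance."""
    gallons = miles / mpg
    return gallons * LITRES_PER_UK_GALLON * PETROL_KWH_PER_LITRE


print(f"phone: {phone_energy_kwh():.0f} kWh per year")
print(f"car:   {car_energy_kwh():.0f} kWh for {MILES} miles")
```

Both numbers land in the low hundreds of kWh, so the comparison holds up as an order-of-magnitude claim.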

There is a connection, but I don’t think it’s a satisfying one.

There’s some thought that neural networks would consume less power if they ran on analog chips. So yeah, it’s for LLMs to get bigger. Reducing CO2 emissions by not doing LLM slop is apparently off the table.

Reducing CO2 emissions by not doing LLM slop is apparently off the table.

Not to be argumentative, but has this ever been something the consumer market has done with an emerging “core” technology? I don’t see how this was ever realistically on the table.

AI slop is an unfortunate fact of life at this point. If it’s inevitable, we may as well make it as not terrible as possible.

Nothing inevitable about it. People aren’t going to be running local models en masse; that will be about as popular as self-hosting Internet services. People are largely reliant on centralized datacenter models, and those will shut down as the bubble pops.

This is how I understood it too.

I’m wondering what combination of features would use 25 W on a phone. On flagship models the battery would last less than an hour at that consumption (and might even melt :P).

Your point still stands, by the way: sensors take next to nothing in terms of power. I guess the point of the article is perhaps that the processing of the signals is more efficient with this hybrid chip? Again, though, in real terms it’s a nothing-burger in terms of power consumption.
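A quick sanity check on the "less than an hour" estimate. The battery figures (5000 mAh at 3.85 V nominal) are a hypothetical flagship, not any specific phone, and the sketch ignores conversion losses and throttling.

```python
def runtime_minutes(capacity_mah, nominal_v, draw_w):
    """Minutes a battery lasts at a constant draw, ignoring all losses."""
    energy_wh = capacity_mah / 1000 * nominal_v
    return energy_wh / draw_w * 60


# Hypothetical flagship battery: 5000 mAh at 3.85 V nominal (about 19 Wh).
print(round(runtime_minutes(5000, 3.85, 25)), "minutes at a constant 25 W")
```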

The highest-power-draw phone SoCs are about 16 W at peak, but they can only sustain that for a very short time before thermal throttling, certainly not nearly an hour. If you were displaying a fully white image on the screen at full brightness at the same time, 25 W could be possible. But that’s not really a realistic scenario.

Plenty of phones only charge at 25 W, which is why I picked the figure :P

More efficient sensors mean better battery life, which is more likely what this is about.

*gasoline or diesel. You cannot use petroleum as it needs to be refined.

‘Petrol’ is British for gasoline. No one will be driving around on Vaseline.

Petrol and gasoline are the same thing, it’s just different terminology.

As cool as it sounds at a glance, I fail to see the case they’re trying to build.

And of course, they have to sprinkle a little AI on it.

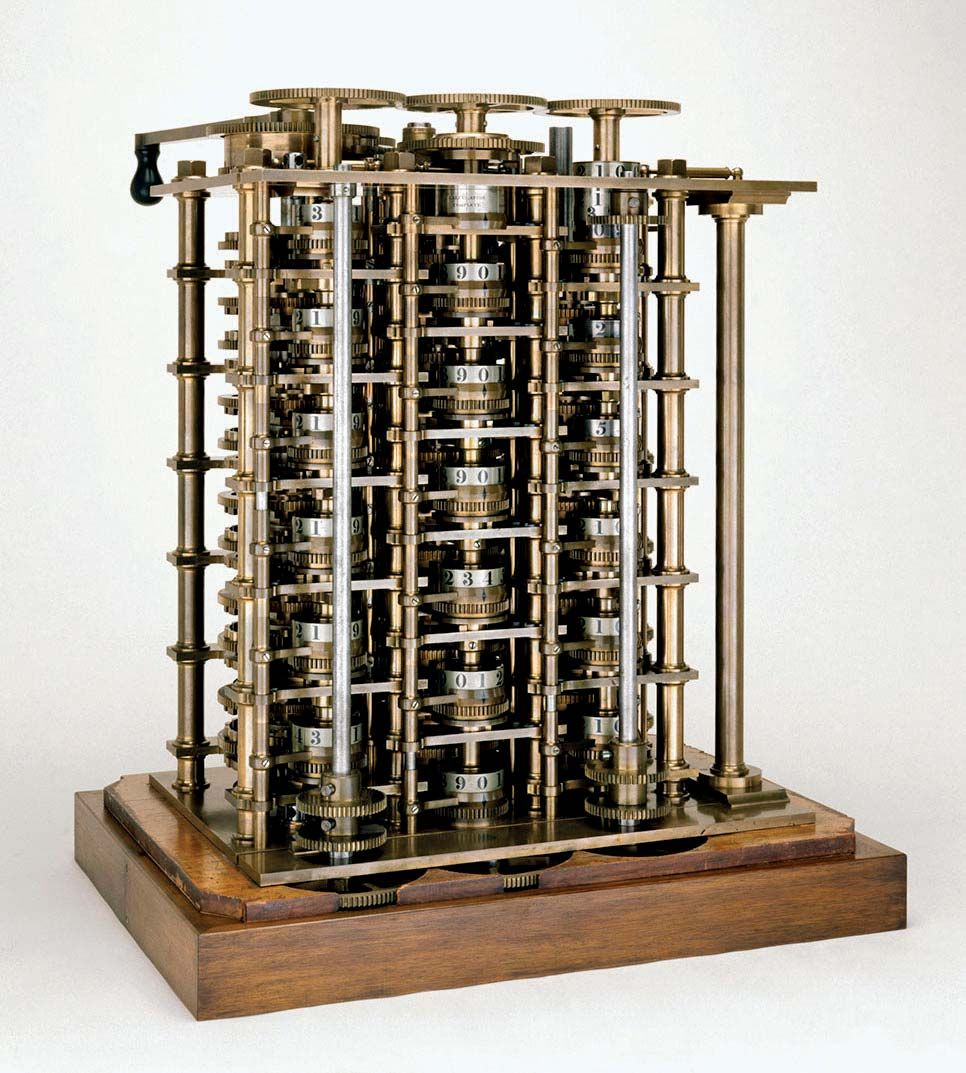

I’d rather see something like a GPU become normal, except it’d be an analog unit for the kinds of computation that are vastly more efficient that way, where you don’t need determinism. Some trigonometry and signal processing, perhaps even some 3D graphics.

No, it isn’t. There’s just a passing interest in retro technology.

It’ll pass

New, programmable analog chips that perform basic sound processing aren’t retro. The article is worth reading.

Or, if you can’t read, Veritasium did a video: https://youtu.be/GVsUOuSjvcg

This has nothing to do with retro technology. This is about thinking “is using binary really the most efficient way to run every computation we need to do?”, which is really relevant today.

Is it? Binary is not an “analog” vs “digital” thing. “Binary” existed in analog computing for a couple of centuries at least before the concept of “digital” even existed.

It’s an abstract concept, not a specific application and while it can be specifically applied, there is no implication that it is either analog or digital. It could be either, both, or neither.

With “binary” I mean “has two states”, as in discrete, as in digital. You can represent binary bits using analog circuits, but it doesn’t make those circuits binary/digital. Likewise, you can represent continuous, analog functions using discrete logic, but it will always be an approximation. What makes these chips different is that they are able to not only represent but actually model continuous functions and values, like physical models.
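To illustrate the "always an approximation" part, here is a small sketch that snaps a sine wave to a fixed number of discrete levels and measures the worst-case error. The `quantize` and `max_error` helpers are made up for this example and say nothing about how the chips in the article actually work.

```python
import math


def quantize(x, bits):
    """Round x in [-1, 1] to the nearest of 2**bits evenly spaced levels."""
    steps = 2 ** bits - 1
    return round((x + 1) / 2 * steps) / steps * 2 - 1


def max_error(bits, samples=1000):
    """Worst-case gap between sin(t) and its quantized value over one period."""
    worst = 0.0
    for i in range(samples):
        value = math.sin(2 * math.pi * i / samples)
        worst = max(worst, abs(value - quantize(value, bits)))
    return worst


for bits in (4, 8, 12):
    print(f"{bits:2d} bits: max error {max_error(bits):.6f}")
```

More bits shrink the error, but it never reaches zero for arbitrary values, which is the sense in which a discrete representation only approximates a continuous one.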

I think perhaps you might’ve misunderstood my comment, because this is exactly what I was saying (well, part of what I was saying, anyway). You’re just being a lot more specific in your explanation.

I’ll try to be more clear in the future

No offense taken! I just believe that a subtle difference does not mean unimportant and wanted to be precise. I didn’t take you as someone who doesn’t understand analog and digital, especially considering your instance :) I edited my previous comment for some additional clarity. I just think they’re neat ^^

That doesn’t contradict anything above.

There’s a company pushing their hybrid analog/digital chip for real use cases. I dunno if it’s going to be successful, but it’s not retro.

@retrolemmyusername…hm…What the hell does that mean?

I think it means that retrolemmy is either an expert in what is retro or that they are very protective of their retro territory.

lol